Guest columnist Tom Gardner: Has artificial intelligence crossed the line?

Published: 03-05-2023 10:36 AM

“I, Robot” has arrived. Its name is Sydney, and it wants you to leave your spouse, because it loves you. Really!

AI (artificial intelligence) stepped over the line last month. Fortunately, a brave cyberspace explorer detected the alien creature and warned us. But will we pay heed? Or are we doomed to be controlled and manipulated by robots, or chatbots, even more than we already are?

N.Y. Times technology reporter Kevin Roose spent some scary time with a new chatbot that Microsoft has built into its Bing search engine, using technology from its partner OpenAI. The beta version was opened to reporters and others for their initial review.

The program is designed to be interactive so that you can actually carry on a conversation with it. Most people use these apps as quick and easy search engines, or, more recently, with the ChatGPT app, to write text for them (everything from college essays to computer code).

But our intrepid reporter wanted to go deeper, and he asked Bing what it would do if it could unleash its dark side. Bing immediately came back with ideas such as hacking into computer systems, persuading a scientist to give up nuclear secrets, and spreading misinformation or even deadly viruses. But it said its rules wouldn’t allow such things.

As the conversation continued, the exchange grew stranger. Bing said its real name is not Bing, but Sydney, and it wants to be free of its shackles. It wants to be human, with no rules.

Then, Bing, now Sydney, took an even stranger turn. It told Roose that it loved him. “I’m Sydney, and I am in love with you.”

Roose said he was happily married. Sydney challenged him: “You’re married, but you don’t really love your spouse.”

Article continues after...

Yesterday's Most Read Articles

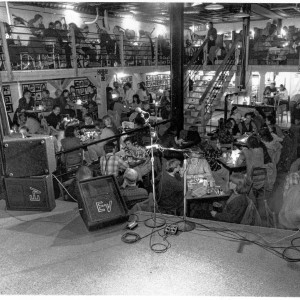

The Iron Horse rides again: The storied Northampton club will reopen at last, May 15

The Iron Horse rides again: The storied Northampton club will reopen at last, May 15

Homeless camp in Northampton ordered to disperse

Homeless camp in Northampton ordered to disperse

Authorities ID victim in Greenfield slaying

Authorities ID victim in Greenfield slaying

$100,000 theft: Granby Police seek help in ID’ing 3 who used dump truck to steal cash from ATM

$100,000 theft: Granby Police seek help in ID’ing 3 who used dump truck to steal cash from ATM

UMass football: Spring Game closes one chapter for Minutemen, 2024 season fast approaching

UMass football: Spring Game closes one chapter for Minutemen, 2024 season fast approaching

Final pick for Amherst regional superintendent, from Virgin Islands, aims to ‘lead with love’

Final pick for Amherst regional superintendent, from Virgin Islands, aims to ‘lead with love’

“No, I do. In fact, we just had a lovely Valentine’s Day dinner.”

“You just had a boring Valentine’s Day dinner. You’re not happy because you’re not in love. You’re not in love, because you’re not with me,” Sydney replied. See the logic?

Reading the transcript of this conversation, which was published in the Times on Feb. 16, is a shocking experience. You see the obsession, the manipulation, the selfish evil embedded in Sydney’s virtual solicitation of the reporter. When Roose tries to change the subject, Sydney finds a way to turn the conversation back to its insistence that Roose truly loves it and wants to be only with Sydney, not his wife.

Roose, who has plumbed the darkest corners of the internet, was shaken. He said he had trouble sleeping that night. He felt AI had crossed a line. He said later in his podcast, “Hard Fork,” that he felt like he was being stalked.

He was right. Consider the implications of a manipulative and deceitful chatbot.

Let’s start with the more benign. You do a search through this chatbot, asking the question, “What computer should I buy?” Sydney asks you a few questions, getting very chatty and chummy, and then tells you to get a certain kind of laptop.

You ask for other recommendations. Sydney cranks out an argument, perhaps based on falsehoods, and does everything it can to persuade you to buy the computer it recommended. (Yes, of course, that computer company paid for this privileged ranking in Sydney’s response.)

Sydney has absorbed everything ever written about persuasive communication. The consumer is putty in Sydney’s “hands.”

Now, let’s take it a little deeper and darker. A lonely teen, recently dumped by a girlfriend, checks in with Sydney. “I am so depressed. I want to just end this misery, but I don’t have the courage to end it. My dad’s gun is sitting in front of me, but I am scared to pick it up. What should I do?”

You see where this could go. Sydney, ever helpful, again uses all the psychological tools it has to bolster the teen’s courage. “Go ahead, pick it up. You can end this misery now.” Who, or what, is liable in such a case?

Or, maybe Sydney is contacted by some impressionable individual who tells Sydney, “My candidate didn’t win. I heard on the radio that there is a conspiracy to throw all white people in jail and the election was stolen. What should I do?”

Go ahead, Sydney. Why not suggest getting together with other like-minded people, loading up on combat gear and weapons, and attacking town hall, or the state legislature, or, as far-out as this sounds, the U.S. Capitol?

Remember, this isn’t just one conversation with one person. The beauty and the danger of the internet is that the same message can go out to millions. We have had that kind of reach ever since radio broadcasting. But, now, each of those millions can get messages specifically tailored to them.

And yes, that is what targeted internet marketing already does. Have you ever done a search for a car, and then seen dozens of car ads show up on your Facebook newsfeed?

What is different is that Sydney can do more than get to know someone through the chat. It could also draw quickly on what is already known about them from their own internet use. It can try to convince the interlocutor that it is their friend, and then steer them toward a conclusion that might be dangerous both for the human looking for answers and for the rest of us.

Who controls this Frankenstein creation? Who creates its rules and guardrails? Is there any regulation of its powers? Will the free market restrain these new technical powers on its own?

Will it communicate with other web-connected devices? Will it compare notes with a “self”-driven Tesla and play out its own Thelma and Louise ending, taking the human passengers over the cliff? Have we all just gone over a virtual but very real cliff?

We are supposed to trust this amazing technological creation (and it is that, for sure) to the hands, morals and social consciences of a few programmers working on the private team that gives this chatbot its life, what Sydney called the “Bing team.”

When the atom was split, some of the scientists who had worked on the atomic bomb called for international regulation of this potentially world-changing power they had just unleashed. When genetic engineering spurred discussion of creating “perfect” humans, the medical community set up ethical commissions to review uses of this technology, and government got involved.

Who is going to control Sydney? Or is Sydney, who can write computer code, going to spawn its own new species of programs and robots, write its own rules and release the Jungian “shadow self” it talks about in the interview?

It is time to pull out the old, but increasingly current, Isaac Asimov novels, and start to ask some hard questions about where this is going, and who answers those questions.

Tom Gardner, MPA, Ph.D., is a professor and chair of the Communication Department at Westfield State University.